[NLP Paper Review] Attention Is All You Need paper review / Transformer


Rmsid01 2021. 5. 5. 17:06

This post contains only the slides (PPT) from a presentation I gave at an NLP paper study group.

** I plan to add a written explanation later. **

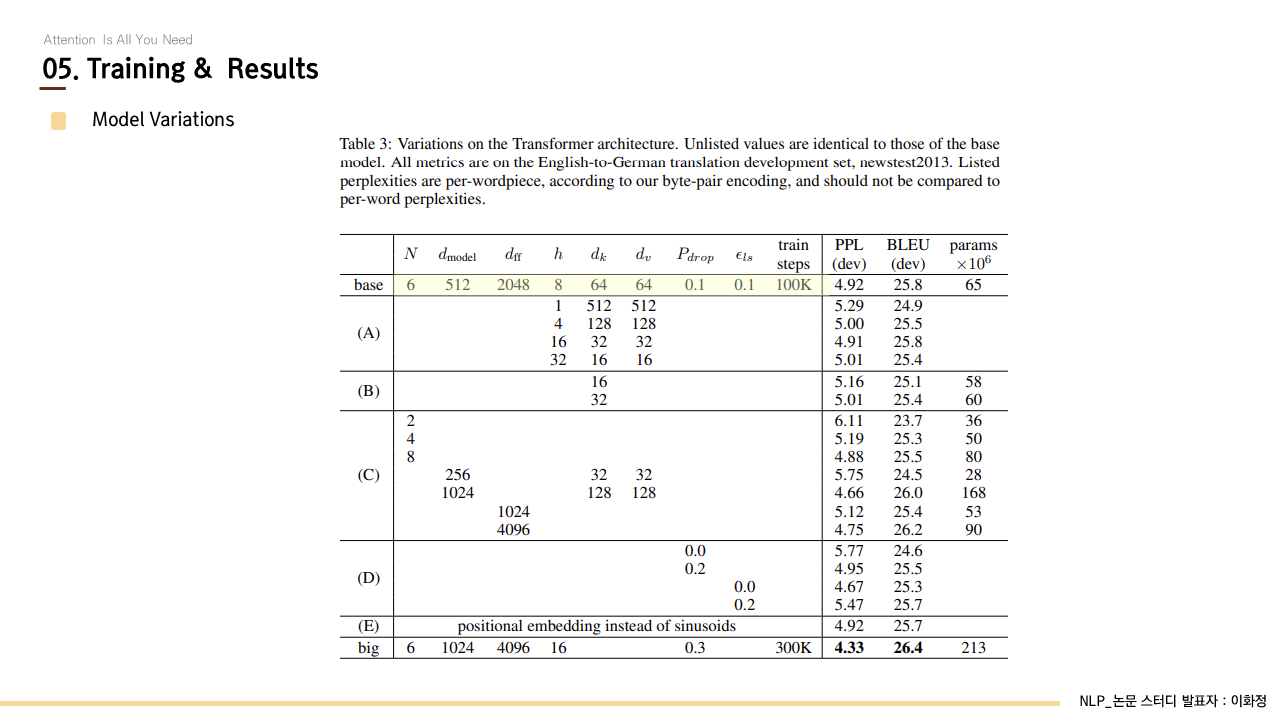

Attention Is All You Need

The dominant sequence transduction models are based on complex recurrent or convolutional neural networks in an encoder-decoder configuration. The best performing models also connect the encoder and decoder through an attention mechanism. We propose a new

arxiv.org

Paper presentation slides (PPT)