HAZEL

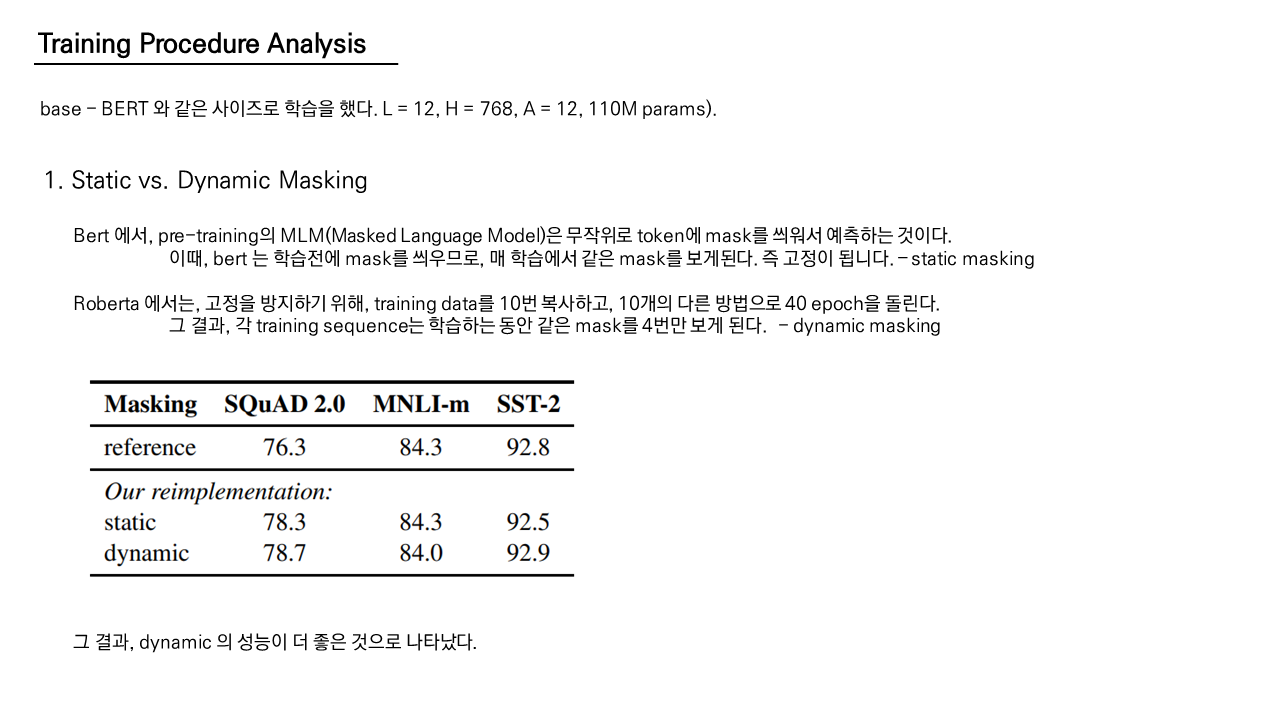

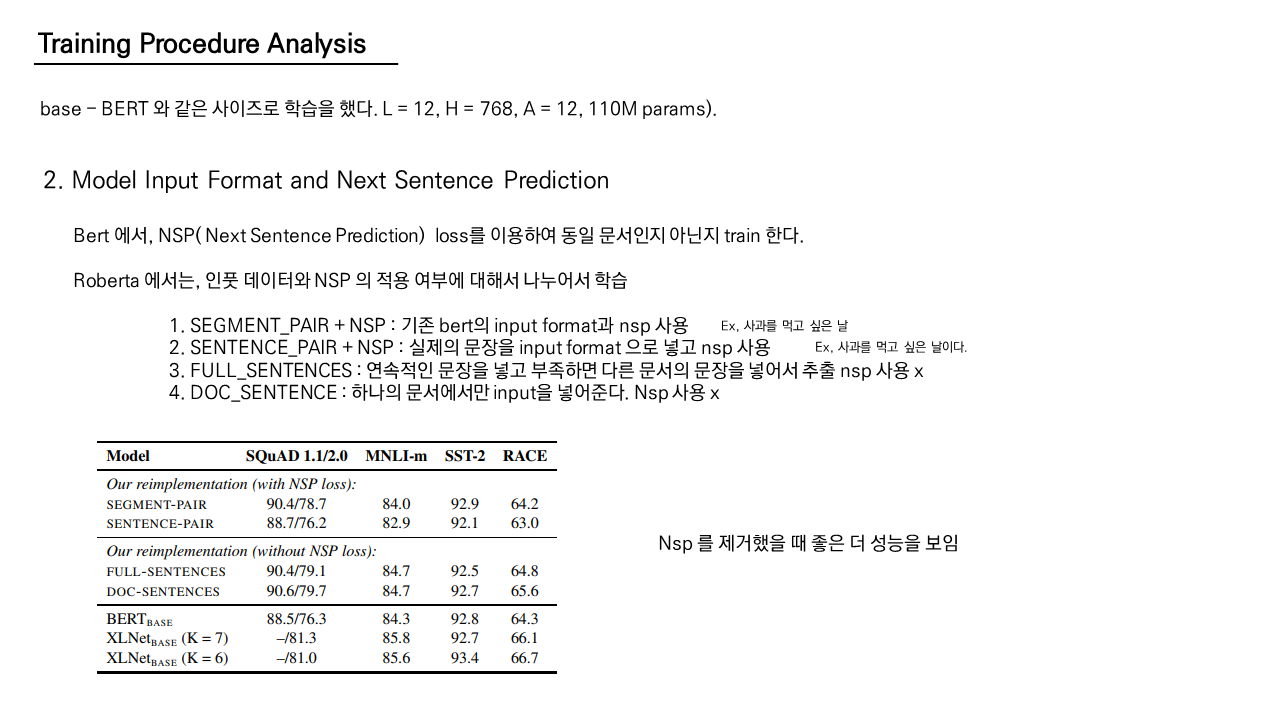

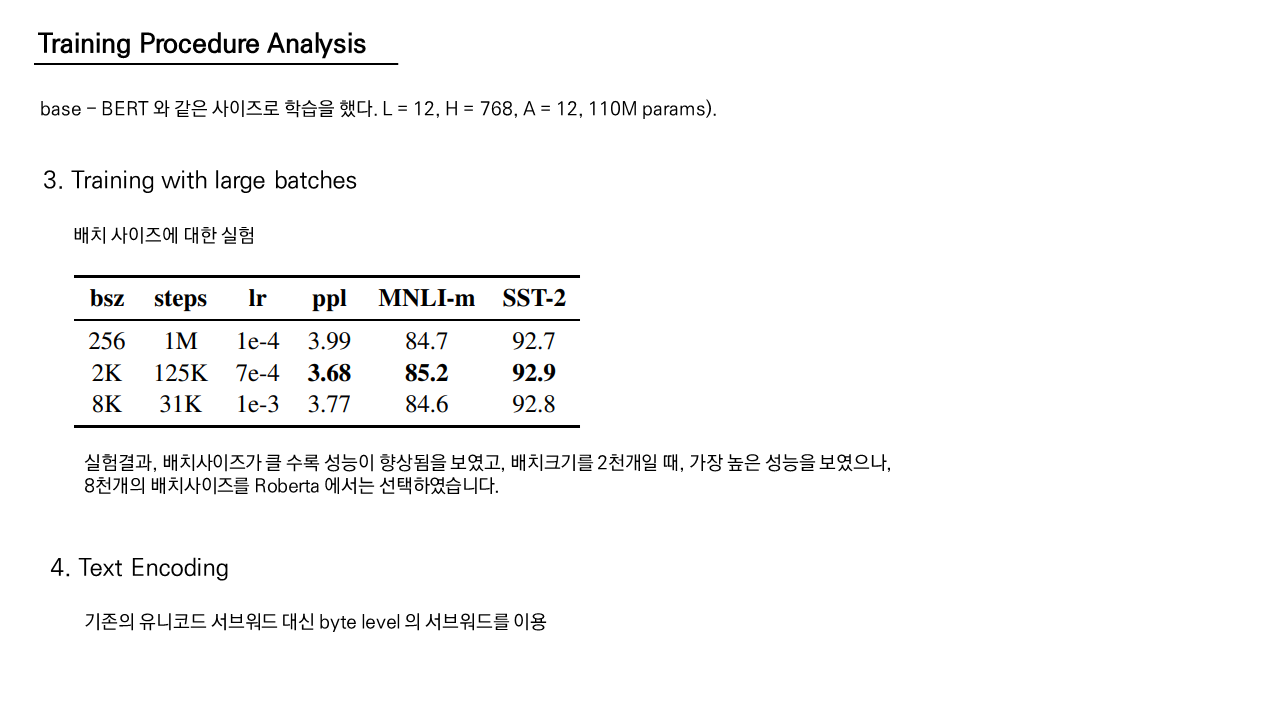

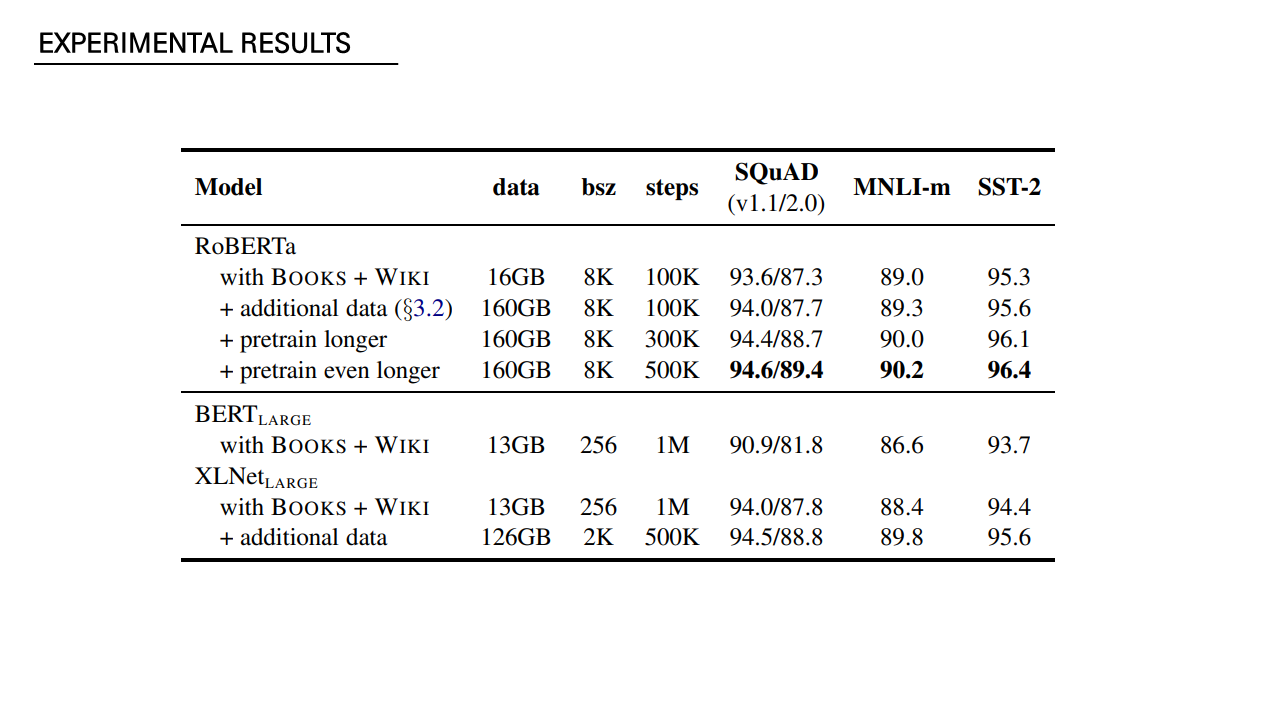

[NLP Paper Review] RoBERTa: A Robustly Optimized BERT Pretraining Approach (Paper Review) / RoBERTa

Rmsid01 2021. 10. 29. 23:47

https://arxiv.org/abs/1907.11692

RoBERTa: A Robustly Optimized BERT Pretraining Approach

Language model pretraining has led to significant performance gains but careful comparison between different approaches is challenging. Training is computationally expensive, often done on private datasets of different sizes, and, as we will show, hyperpar

Paper presentation slides (PPT)