
[NLP Paper Review] Attention Is All You Need: Paper Review / Transformer

Rmsid01

2021. 5. 5. 17:06

This post covers what I presented at an NLP paper study group. For now, it contains only the slides (PPT).

- I plan to add a written explanation later.

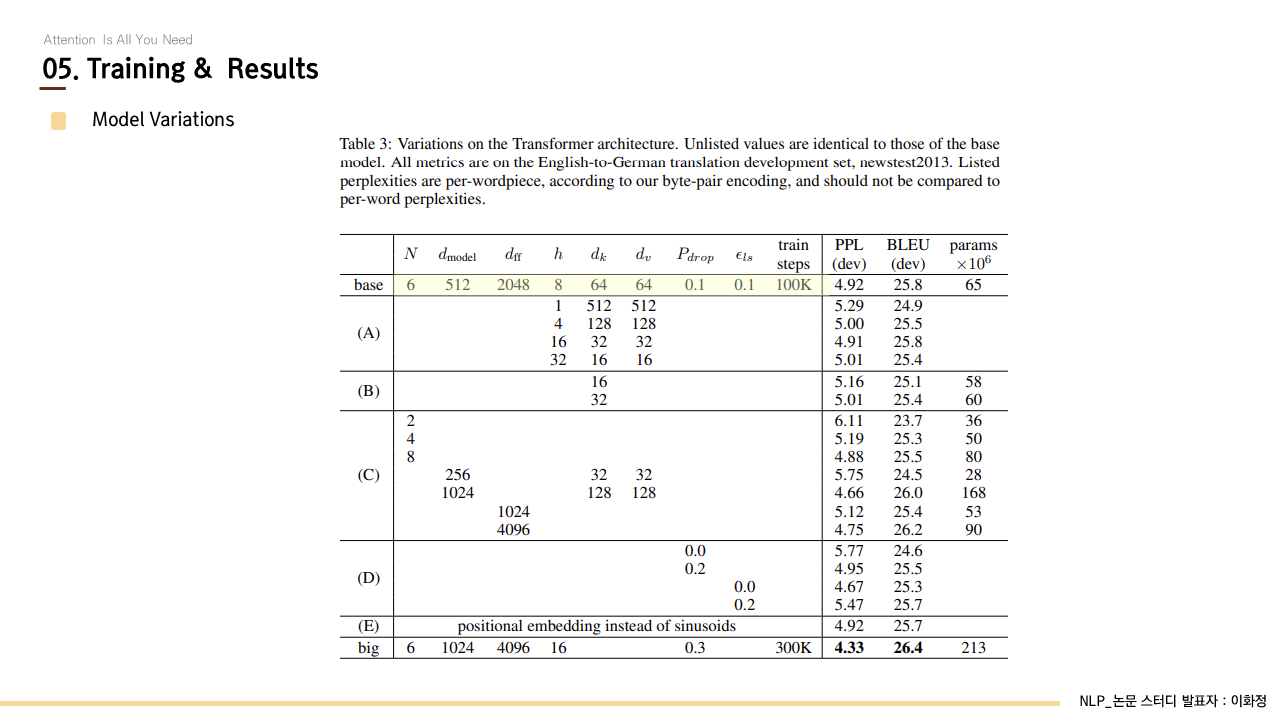

Attention Is All You Need (arxiv.org)

"The dominant sequence transduction models are based on complex recurrent or convolutional neural networks in an encoder-decoder configuration. The best performing models also connect the encoder and decoder through an attention mechanism. We propose a new …"
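The core operation behind the paper's title is scaled dot-product attention, which the paper defines as Attention(Q, K, V) = softmax(QK^T / √d_k) V. Below is a minimal pure-Python sketch of that formula, included only as an illustration. It is not the authors' implementation: it leaves out masking, the multi-head projections, and batching, and the helper names (`softmax`, `matmul`, `transpose`, `scaled_dot_product_attention`) are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row (subtracts the row max first)."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def transpose(a: Matrix) -> Matrix:
    """Swap rows and columns of a matrix."""
    return [list(col) for col in zip(*a)]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    inner = len(b)
    cols = len(b[0])
    return [
        [sum(a[i][t] * b[t][j] for t in range(inner)) for j in range(cols)]
        for i in range(len(a))
    ]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Compute softmax(Q K^T / sqrt(d_k)) V.

    Each row of q is one query; k and v must have the same number of rows
    (one key/value pair per position). d_k is the key dimension.
    """
    d_k = len(k[0])
    scores = matmul(q, transpose(k))                      # query-key dot products
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = [softmax(row) for row in scaled]           # each row sums to 1
    return matmul(weights, v)                             # weighted sum of values


if __name__ == "__main__":
    q = [[1.0, 0.0]]
    k = [[1.0, 0.0], [0.0, 1.0]]
    v = [[1.0, 2.0], [3.0, 4.0]]
    # The query matches the first key more closely, so the output leans toward v[0].
    print(scaled_dot_product_attention(q, k, v))
```

Dividing by √d_k keeps the dot products from growing with the key dimension, which the paper motivates as a way to keep the softmax out of regions with very small gradients.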

Paper presentation slides (PPT)